Preprint Analysis#

How to get all preprints on a topic?#

We use OpenAlex to retrieve all articles on a topic that are available as preprints but have not yet been published in a peer-reviewed journal.

Load libraries#

!pip install pandas

!pip install pyalex

!pip install matplotlib


from pyalex import Works, Authors, Sources, Institutions, Concepts, Publishers, Funders

from itertools import chain

import pandas as pd

import pyalex

import os

Define helper functions#

# Determine whether the paper has already been published at any of its locations (e.g. in a journal)
def is_any_location_published(locations):
    for location in locations:
        if location['version'] == 'publishedVersion':
            return True
    return False

# Combine all authors into a single comma-separated string
def join_authors(list_of_authors):
    return ', '.join([author['author']['display_name'] for author in list_of_authors])

# Extract key information from the locations
def join_locations(list_of_locations):
    summary = []
    for location in list_of_locations:
        if location['source']:
            summary.append(f"{location['version']}: {location['source']['host_organization_name']} - {location['landing_page_url']}")
        else:
            summary.append(f"{location['version']} - {location['landing_page_url']}")
    return ', '.join(summary)

# Return the name of the source (journal or repository) of the primary location, if any
def get_journal(primary_location):
    # Guard against a missing primary_location
    source = (primary_location or {}).get('source')
    if source:
        return source.get('display_name', '')
    return None
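To see how these helpers behave, the following sketch applies them to a small hand-written record. The record is hypothetical: it only mimics the fields of an OpenAlex work that the helpers read, and all names and URLs in it are invented for illustration. The helpers are restated so that the block runs on its own.

```python
# Hypothetical record mimicking the fields of an OpenAlex work that the helpers read.
# All names and URLs are invented for illustration.
preprint_location = {
    'version': 'submittedVersion',
    'landing_page_url': 'https://example.org/preprint/1',
    'source': {'display_name': 'Example Preprint Server',
               'host_organization_name': 'Example Org'},
}
journal_location = {
    'version': 'publishedVersion',
    'landing_page_url': 'https://example.org/journal/1',
    'source': None,
}
sample_work = {
    'authorships': [
        {'author': {'display_name': 'Ada Example'}},
        {'author': {'display_name': 'Ben Sample'}},
    ],
    'primary_location': preprint_location,
    'locations': [preprint_location, journal_location],
}

def is_any_location_published(locations):
    for location in locations:
        if location['version'] == 'publishedVersion':
            return True
    return False

def join_authors(list_of_authors):
    return ', '.join([author['author']['display_name'] for author in list_of_authors])

def join_locations(list_of_locations):
    summary = []
    for location in list_of_locations:
        if location['source']:
            summary.append(f"{location['version']}: {location['source']['host_organization_name']} - {location['landing_page_url']}")
        else:
            summary.append(f"{location['version']} - {location['landing_page_url']}")
    return ', '.join(summary)

def get_journal(primary_location):
    source = (primary_location or {}).get('source')
    if source:
        return source.get('display_name', '')
    return None

published = is_any_location_published(sample_work['locations'])
authors = join_authors(sample_work['authorships'])
locations_summary = join_locations(sample_work['locations'])
journal = get_journal(sample_work['primary_location'])

print(published)          # True
print(authors)            # Ada Example, Ben Sample
print(locations_summary)
print(journal)            # Example Preprint Server
```

Because the second location carries the version `publishedVersion`, this record would not count as an unpublished preprint.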

Set the Topic & Year#

Set the topic, the publication year, and the maximum number of papers to retrieve.

# These variables limit the size of the output and the time required for execution
topic = 'COVID'
year = None  # when set to None, papers from all years are queried
n_max = 500  # when set to None, all papers are queried

Get the preprints#

Run the following code to get the preprints for the specified parameters.

# Build the query: articles whose primary location is a submitted version,
# sorted by citation count (most cited first)
if year:
    query = Works().search(topic).filter(type="article", publication_year=year, primary_location={'version': 'submittedVersion'}, locations={'is_published': False}).sort(cited_by_count="desc")
else:
    query = Works().search(topic).filter(type="article", primary_location={'version': 'submittedVersion'}, locations={'is_published': False}).sort(cited_by_count="desc")
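The two branches above differ only in the `publication_year` filter. One way to avoid the duplication is to build the filter arguments once and add the year only when it is set. This is a minimal sketch: `build_filters` is a hypothetical helper, not part of pyalex, and the final call appears only as a comment because it relies on the `Works` class and the `topic` variable defined in this notebook.

```python
def build_filters(year=None):
    """Return the keyword arguments for the preprint filter (hypothetical helper)."""
    filters = {
        'type': 'article',
        'primary_location': {'version': 'submittedVersion'},
        'locations': {'is_published': False},
    }
    # Restrict to a single publication year only when one is given
    if year:
        filters['publication_year'] = year
    return filters

filters_all_years = build_filters()
filters_2020 = build_filters(2020)

# Assumed usage with the objects defined in this notebook:
# query = Works().search(topic).filter(**filters_2020).sort(cited_by_count="desc")
```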

preprints = []

keys = ['id', 'title', 'publication_date', 'doi', 'cited_by_count', 'language']

# Iterate over all query results

for item in chain(*query.paginate(per_page=200, n_max=n_max)):
    # Based on the published locations, determine whether it is a real preprint
    locations_count = item.get('locations_count', None)
    locations = item.get('locations') or []

    # Only append the paper to the preprints if it is not published in any other journal
    if locations_count == 1 or not is_any_location_published(locations):
        # Get all relevant properties
        properties = {key: item.get(key, None) for key in keys}
        # Include joined authors and locations
        properties.update({'authors': join_authors(item['authorships']),
                           'locations': join_locations(locations),
                           'journal': get_journal(item.get('primary_location', {})),
                           'location_count': locations_count})
        preprints.append(properties)
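The selection rule in the loop keeps a work if it has exactly one location, or if none of its locations is a published version. The sketch below applies that rule to three hand-written records. The records and the wrapper `is_unpublished_preprint` are hypothetical and only illustrate the condition; they are not OpenAlex data.

```python
def is_any_location_published(locations):
    return any(location.get('version') == 'publishedVersion' for location in locations)

def is_unpublished_preprint(item):
    # Same condition as in the loop, wrapped in a hypothetical helper
    locations = item.get('locations') or []
    return item.get('locations_count') == 1 or not is_any_location_published(locations)

# Hypothetical records that contain only the fields the rule reads
records = [
    {'id': 'A', 'locations_count': 1,
     'locations': [{'version': 'submittedVersion'}]},
    {'id': 'B', 'locations_count': 2,
     'locations': [{'version': 'submittedVersion'}, {'version': 'acceptedVersion'}]},
    {'id': 'C', 'locations_count': 2,
     'locations': [{'version': 'submittedVersion'}, {'version': 'publishedVersion'}]},
]

kept = [record['id'] for record in records if is_unpublished_preprint(record)]
print(kept)  # ['A', 'B']
```

Record C is dropped because one of its locations is a published version. The shortcut for a single location relies on the query, which already restricts the primary location to `submittedVersion`.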

Process & store the data#

df = pd.DataFrame.from_dict(preprints)

# Compute publication year

df['publication_year'] = df['publication_date'].str.split('-').str.get(0)

df

| | id | title | publication_date | doi | cited_by_count | language | authors | locations | journal | location_count | publication_year |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | https://openalex.org/W3013515352 | COVID-19 Screening on Chest X-ray Images Using... | 2020-03-27 | None | 410 | en | Jianpeng Zhang, Yutong Xie, Yi Li, Chunhua She... | submittedVersion - https://europepmc.org/artic... | None | 1 | 2020 |

| 1 | https://openalex.org/W3032488597 | Urban nature as a source of resilience during ... | 2020-04-17 | https://doi.org/10.31219/osf.io/3wx5a | 235 | en | Karl Samuelsson, Stephan Barthel, Johan Coldin... | submittedVersion - https://doi.org/10.31219/os... | None | 3 | 2020 |

| 2 | https://openalex.org/W3009111036 | Clinical Pathology of Critical Patient with No... | 2020-02-27 | None | 196 | en | Weiren Luo, Hongjie Yu, Jizhou Gou, Xiaoxing L... | submittedVersion - http://rucweb.tsg211.com/ht... | None | 1 | 2020 |

| 3 | https://openalex.org/W3016112654 | The COVID-19 risk perception: A survey on soci... | 2020-03-21 | https://doi.org/10.17632/wh9xk5mp9m.3 | 160 | en | Toan Luu Duc Huynh | submittedVersion: Springer Nature - https://da... | Economics bulletin | 1 | 2020 |

| 4 | https://openalex.org/W3021418304 | Inequality in homeschooling during the Corona ... | 2020-04-30 | https://doi.org/10.31235/osf.io/hf32q | 156 | en | Thijs Bol | submittedVersion - https://doi.org/10.31235/os... | None | 3 | 2020 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 541 | https://openalex.org/W3092726800 | Buffer use and lending impact | 2020-10-19 | None | 7 | en | Marcin Borsuk, Katarzyna Budnik, Matjaž Volk | submittedVersion - https://ideas.repec.org/a/e... | None | 1 | 2020 |

| 542 | https://openalex.org/W3092891190 | U.S. COVID-19 Policy Affecting Agricultural Labor | 2020-01-01 | None | 7 | en | Derek Farnsworth | submittedVersion: Agricultural & Applied Econo... | Choices | 1 | 2020 |

| 543 | https://openalex.org/W3093222147 | Infection Rates from Covid-19 in Great Britain... | 2020-05-22 | https://doi.org/10.31235/osf.io/84f3e | 7 | en | Hill Kulu, Peter Dorey | submittedVersion - https://doi.org/10.31235/os... | None | 4 | 2020 |

| 544 | https://openalex.org/W3093267543 | The Corona recession and bank stress in Germany | 2020-01-01 | None | 7 | en | Reint Gropp, Michael Koetter, William McShane | submittedVersion - https://www.econstor.eu/bit... | None | 1 | 2020 |

| 545 | https://openalex.org/W3094110014 | Contributing Factors to Personal Protective Eq... | 2020-01-01 | None | 7 | en | Jennifer Cohen, Yana van der Meulen Rodgers | submittedVersion: None - https://ideas.repec.o... | MPRA Paper | 1 | 2020 |

546 rows × 11 columns

# Store data as CSV file

os.makedirs('./results', exist_ok=True)

df.to_csv(f'./results/openalex_preprints_{year}_{n_max}.csv')
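With `year = None`, the file name above contains the literal text `None`. If a more descriptive name is preferred, the path can be built by a small helper. This is only a sketch: `build_output_path` and the labels `all-years` and `all` are assumptions for illustration and not part of the original notebook.

```python
import os

def build_output_path(topic, year, n_max, folder='./results'):
    """Build a CSV path that spells out unset parameters (hypothetical helper)."""
    year_label = year if year else 'all-years'
    size_label = n_max if n_max else 'all'
    filename = f'openalex_preprints_{topic}_{year_label}_{size_label}.csv'
    return os.path.join(folder, filename)

path = build_output_path('COVID', None, 500)
print(path)
```

The resulting path could then be passed to `df.to_csv(path)` in place of the f-string.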

Get a sample paper#

paper = df.iloc[0]

paper

id https://openalex.org/W3013515352

title COVID-19 Screening on Chest X-ray Images Using...

publication_date 2020-03-27

doi None

cited_by_count 410

language en

authors Jianpeng Zhang, Yutong Xie, Yi Li, Chunhua She...

locations submittedVersion - https://europepmc.org/artic...

journal None

location_count 1

publication_year 2020

Name: 0, dtype: object

Basic data analysis#

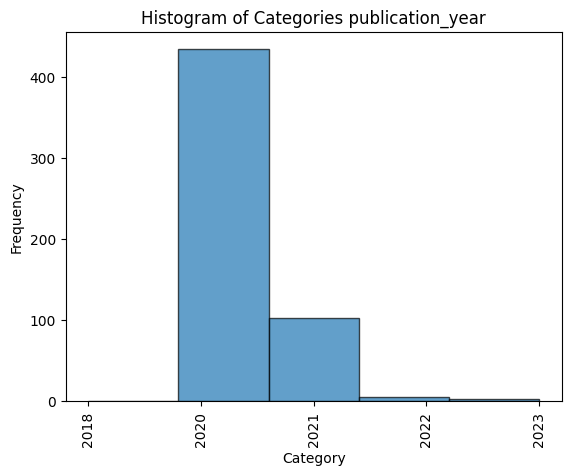

How many preprints were published in each year?#

import matplotlib.pyplot as plt

def plot_histogram(col, n=20):
    # Encode the non-missing values of the column as categories
    # (n is currently unused)
    values = df[col].dropna().astype('category')
    categories = values.cat.categories

    # Plot the histogram with one bin per category, centred on its integer code
    bin_edges = [i - 0.5 for i in range(len(categories) + 1)]
    plt.hist(values.cat.codes, bins=bin_edges, edgecolor='black', alpha=0.7)

    # Adding labels and title
    plt.xlabel('Category')
    plt.ylabel('Frequency')
    plt.title(f'Histogram of Categories {col}')

    # Adding x-ticks with original category names
    plt.xticks(ticks=range(len(categories)), labels=categories, rotation=90)

df['publication_year'].value_counts()[:20]

publication_year

2020 434

2021 103

2022 5

2023 3

2018 1

Name: count, dtype: int64

plot_histogram('publication_year')

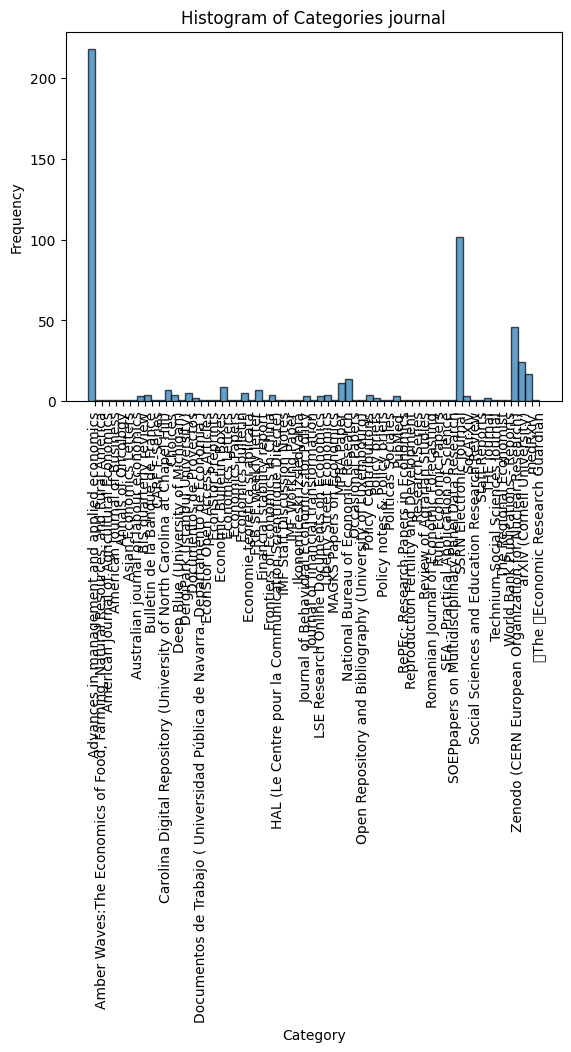

Which journals have published the most preprints?#

df['journal'].value_counts()[:20]

journal

SSRN Electronic Journal 102

Zenodo (CERN European Organization for Nuclear Research) 46

arXiv (Cornell University) 24

medRxiv 17

National Bureau of Economic Research 14

MPRA Paper 11

Economic Bulletin Boxes 9

FRB SF weekly letter 7

Carolina Digital Repository (University of North Carolina at Chapel Hill) 7

Economics bulletin 5

DergiPark (Istanbul University) 5

Policy Contributions 4

BIS quarterly review 4

Liberty Street Economics 4

Frontiers of Economics in China 4

Choices 4

SocArXiv 3

PubMed 3

Journal of Behavioral Economics for Policy 3

LSE Research Online Documents on Economics 3

Name: count, dtype: int64

plot_histogram('journal')
